publications

2024


View-centric CAD Reconstruction (under review, title pending)
James Noeckel, Benjamin Jones, Brian Curless, and 1 more author
Dec 2024


2023


B-rep Matching for Collaborating Across CAD Systems
Benjamin Jones, James Noeckel, Milin Kodnongbua, and 2 more authors
ACM Trans. Graph., Jul 2023

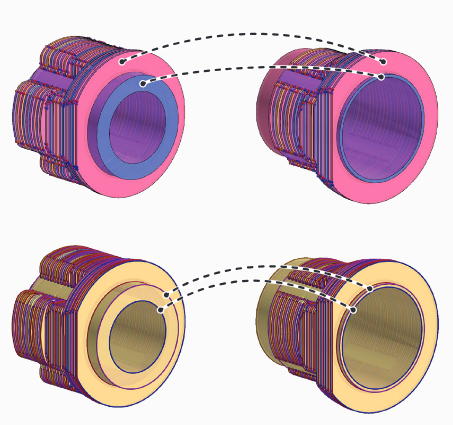

Large Computer-Aided Design (CAD) projects usually require collaboration across many different CAD systems, as well as applications that interoperate with them for manufacturing, visualization, or simulation. A fundamental barrier to such collaboration is the difficulty of referring to parts of the geometry (such as a specific face) robustly under geometric and/or topological changes to the model. Persistent referencing schemes are a fundamental aspect of most CAD tools, but models that are shared across systems cannot generally make use of these internal referencing mechanisms, creating a challenge for collaboration. In this work, we address this issue by developing a novel learning-based algorithm that can automatically find correspondences between two CAD models using the standard representation for sharing models across CAD systems: the Boundary Representation (B-rep). Because our method works directly on B-reps, it can be generalized across different CAD applications, enabling collaboration.

2022


Mates2Motion: Learning How Mechanical CAD Assemblies Work
James Noeckel, Benjamin T. Jones, Karl Willis, and 2 more authors
Aug 2022

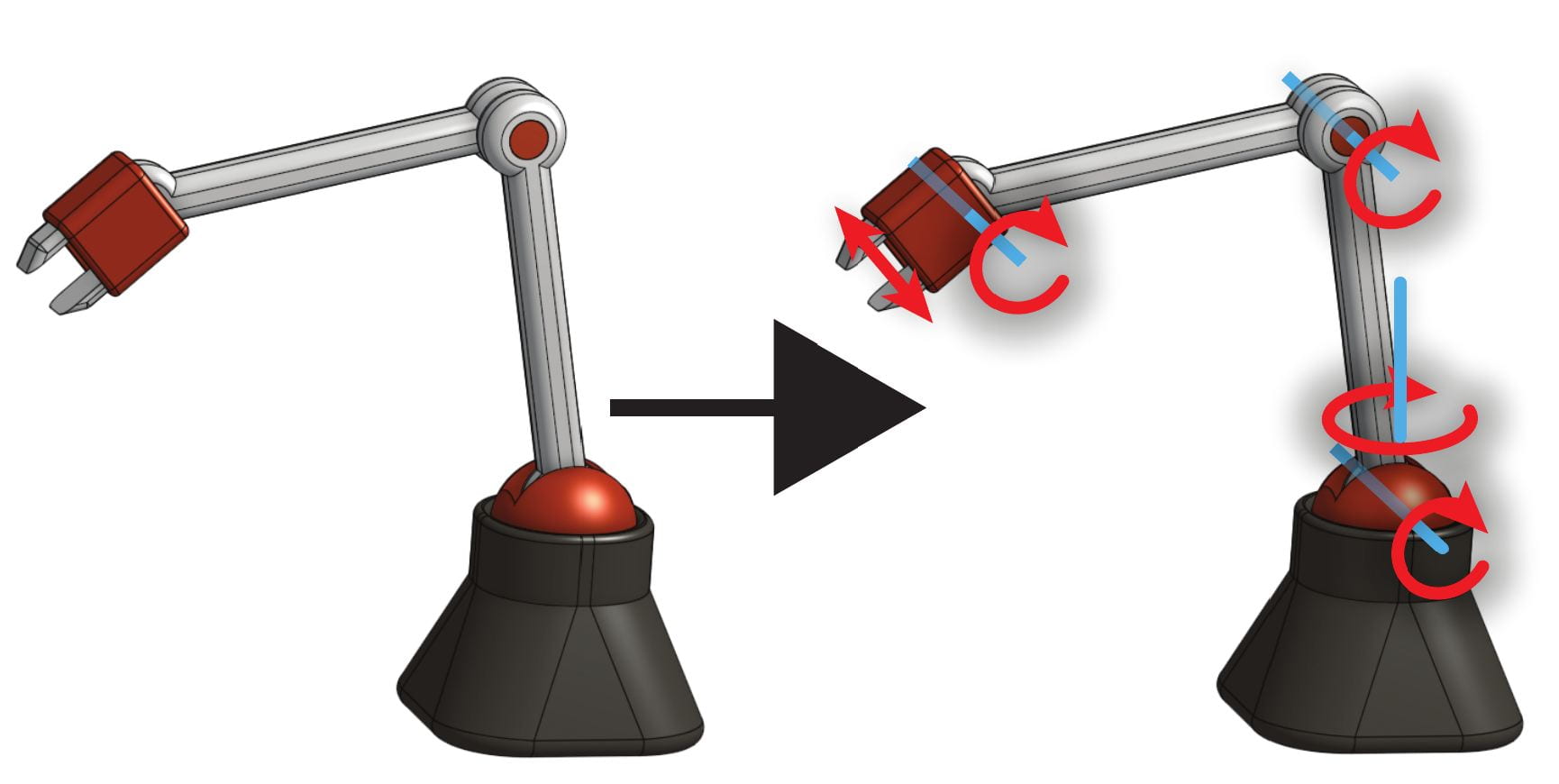

We describe our work on inferring the degrees of freedom between mated parts in mechanical assemblies using deep learning on CAD representations. We train our model on a large dataset of real-world mechanical assemblies consisting of CAD parts and the mates joining them. We present methods for redefining these mates so that they better reflect the motion of the assembly, as well as for narrowing down the possible axes of motion. We also conduct a user study to create a motion-annotated test set with more reliable labels.
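The abstract centers on the degrees of freedom (DOF) a mate leaves open between two parts. As a hedged illustration only, the sketch below records the rotations and translations that common CAD mate types nominally permit, using standard kinematic-joint counts. The mate type names, the `MotionDOF` class, and the `nominal_dof` helper are hypothetical assumptions, not the paper's data format or its learned model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MotionDOF:
    """Relative degrees of freedom a mate leaves open between two parts."""
    rotations: int
    translations: int

    @property
    def total(self) -> int:
        return self.rotations + self.translations


# Hypothetical lookup table: nominal DOF for common CAD mate types, using
# standard kinematic-joint counts. These are not labels from the paper.
NOMINAL_DOF = {
    "fastened": MotionDOF(rotations=0, translations=0),
    "revolute": MotionDOF(rotations=1, translations=0),
    "slider": MotionDOF(rotations=0, translations=1),
    "cylindrical": MotionDOF(rotations=1, translations=1),
    "pin_slot": MotionDOF(rotations=1, translations=1),
    "planar": MotionDOF(rotations=1, translations=2),
    "ball": MotionDOF(rotations=3, translations=0),
}


def nominal_dof(mate_type: str) -> MotionDOF:
    """Look up the nominal DOF of a mate type (case-insensitive).

    Raises KeyError for mate types not in the table.
    """
    return NOMINAL_DOF[mate_type.lower()]


if __name__ == "__main__":
    for name in ("Fastened", "Revolute", "Cylindrical", "Ball"):
        print(name, nominal_dof(name).total)
```

Because the abstract notes that raw mates do not always reflect how an assembly actually moves, a fixed table like this is at best a baseline that a learned model would refine.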

2021


Fabrication-Aware Reverse Engineering for Carpentry
James Noeckel, Haisen Zhao, Brian Curless, and 1 more author
Computer Graphics Forum, Aug 2021

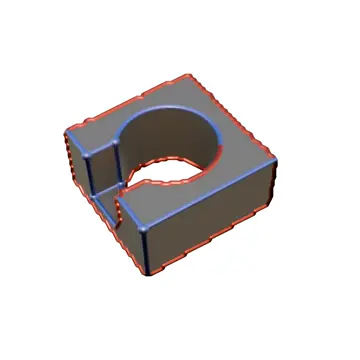

We propose a novel method to generate fabrication blueprints from images of carpentered items. While 3D reconstruction from images is a well-studied problem, typical approaches produce representations that are ill-suited for computer-aided design and fabrication applications. Our key insight is that fabrication processes define and constrain the design space for carpentered objects, and can be leveraged to develop novel reconstruction methods. Our method makes use of domain-specific constraints to recover not just valid geometry, but a semantically valid assembly of parts, using a combination of image-based and geometric optimization techniques. We demonstrate our method on a variety of wooden objects and furniture, and show that we can automatically obtain designs that are both easy to edit and accurate recreations of the ground truth. We further illustrate how our method can be used to fabricate a physical replica of the captured object as well as a customized version, which can be produced by directly editing the reconstructed model in CAD software.

2017


Fast rendering of fabric micro-appearance models under directional and spherical Gaussian lights
Pramook Khungurn, Rundong Wu, James Noeckel, and 2 more authors
ACM Trans. Graph., Nov 2017

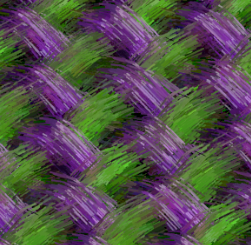

Rendering fabrics using micro-appearance models (fiber-level microgeometry coupled with a fiber scattering model) can take hours per frame. We present a fast, precomputation-based algorithm for rendering both single and multiple scattering in fabrics with repeating structure illuminated by directional and spherical Gaussian lights.

Precomputed light transport (PRT) is well established but challenging to apply directly to cloth. This paper shows how to decompose the problem and pick the right approximations to achieve very high accuracy, with significant performance gains over path tracing. We treat single and multiple scattering separately and approximate local multiple scattering using precomputed transfer functions represented in spherical harmonics. We handle shadowing between fibers with precomputed per-fiber-segment visibility functions, using two different representations to deal separately with low- and high-frequency spherical Gaussian lights.

Our algorithm is designed for GPU performance and high visual quality. Compared to existing PRT methods, it is more accurate. In tens of seconds on a commodity GPU, it renders high-quality supersampled images that take path tracing tens of minutes on a compute cluster.
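The abstract relies on two standard representations: spherical Gaussian (SG) lights and transfer functions stored in spherical harmonics (SH). As a generic, hedged illustration (not the paper's algorithm, which additionally handles fiber shadowing and single versus multiple scattering), the sketch below evaluates an SG lobe G(v) = a·exp(λ(μ·v − 1)), projects spherical functions onto the first two real SH bands, and shades with the core PRT identity: when lighting and transfer share an orthonormal SH basis, the integral of their product over the sphere reduces to a dot product of coefficient vectors. All function names, the two-band truncation, and the quadrature resolution are illustrative assumptions.

```python
import math


def sg_eval(v, axis, sharpness, amplitude):
    """Spherical Gaussian lobe: amplitude * exp(sharpness * (dot(axis, v) - 1)).

    `v` and `axis` are assumed to be unit 3-vectors; the lobe peaks at v == axis.
    """
    cos_angle = sum(vi * ai for vi, ai in zip(v, axis))
    return amplitude * math.exp(sharpness * (cos_angle - 1.0))


def sh_basis_l1(v):
    """Real orthonormal spherical harmonics for bands l = 0 and l = 1.

    Sign conventions for the l = 1 terms vary between references; any
    consistent choice works for the dot-product identity used below.
    """
    x, y, z = v
    c0 = 0.5 * math.sqrt(1.0 / math.pi)    # ~0.282095
    c1 = math.sqrt(3.0 / (4.0 * math.pi))  # ~0.488603
    return [c0, c1 * y, c1 * z, c1 * x]


def project_sh(f, n_theta=64, n_phi=128):
    """Project a spherical function f(v) onto sh_basis_l1 with midpoint quadrature."""
    coeffs = [0.0, 0.0, 0.0, 0.0]
    d_theta = math.pi / n_theta
    d_phi = 2.0 * math.pi / n_phi
    for i in range(n_theta):
        theta = (i + 0.5) * d_theta
        sin_t = math.sin(theta)
        for j in range(n_phi):
            phi = (j + 0.5) * d_phi
            v = (sin_t * math.cos(phi), sin_t * math.sin(phi), math.cos(theta))
            weight = f(v) * sin_t * d_theta * d_phi  # f(v) times the solid-angle element
            for k, basis in enumerate(sh_basis_l1(v)):
                coeffs[k] += weight * basis
    return coeffs


def prt_shade(light_coeffs, transfer_coeffs):
    """PRT shading: with a shared orthonormal basis, the integral of
    lighting * transfer over the sphere equals the coefficient dot product.
    With only two bands this is exact for band-limited inputs and a
    low-order approximation otherwise (e.g. for a sharp SG lobe)."""
    return sum(l * t for l, t in zip(light_coeffs, transfer_coeffs))


if __name__ == "__main__":
    # In PRT the transfer coefficients are precomputed once; the lighting is projected per frame.
    light = project_sh(lambda v: sg_eval(v, (0.0, 0.0, 1.0), 4.0, 1.0))
    transfer = project_sh(lambda v: max(v[2], 0.0))  # hypothetical clamped-cosine transfer
    print(prt_shade(light, transfer))
```

As a sanity check, projecting the band-limited lighting L(v) = 1 + z and a constant transfer T(v) = 1 should give a dot product close to 4π, the exact integral of their product over the unit sphere.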